Section: New Results

Human-Computer Partnerships

Participants: Wendy Mackay [correspondent], Jessalyn Alvina, Marianela Ciolfi Felice, Carla Griggio, Shu Yuan Hsueh, Wanyu Liu, John MacCallum, Nolwenn Maudet, Joanna McGrenere, Midas Nouwens, Andrew Webb.

ExSitu is interested in designing effective human-computer partnerships, in which expert users control their interaction with technology. Rather than treating human users as the 'input' to a computer algorithm, we explore human-centered machine learning, where the goal is to use machine learning and other techniques to increase human capabilities. Whereas much of human-computer interaction research focuses on measuring and improving productivity, our specific goal is to create what we call 'co-adaptive systems', which are discoverable, appropriable and expressive for the user. Jessalyn Alvina, under the supervision of Wendy Mackay, successfully defended her thesis on this topic, Increasing The Expressive Power of Gesture-based Interaction on Mobile Devices [38].

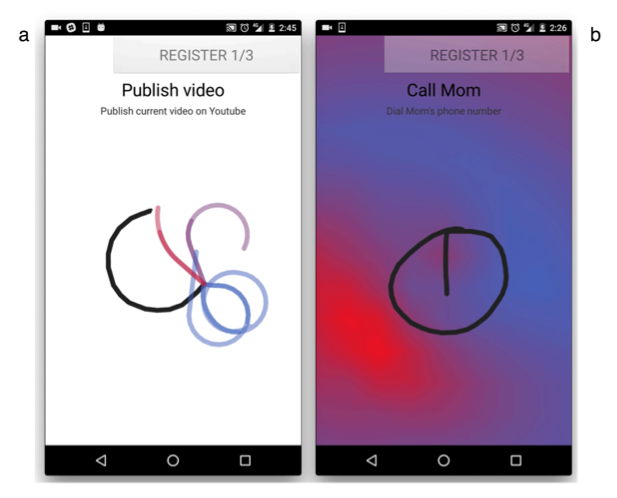

We are interested in helping users create their own custom gesture-based commands for mobile devices. This raises two competing requirements: gestures must be both memorable for the user and reliably recognizable by the system. We created two dynamic guides [22], Fieldward and Pathward, which use progressive feedforward to interactively visualize the “negative space” of unused gestures. The Pathward technique suggests four possible completions of the current gesture, whereas the Fieldward technique uses color gradients to reveal optimal directions for creating recognizable gestures (Figure 2). We ran a two-part experiment in which 27 participants each created 42 personal gesture shortcuts on a smartphone, using Pathward, Fieldward or No Feedforward. The Fieldward technique best supported the most common user strategy, namely creating a memorable gesture first and then adapting it so that the system can recognize it. Users preferred the Fieldward technique to Pathward or No Feedforward, and remembered their gestures more easily when using it. Dynamic guides can help developers design novel gesture vocabularies and support users as they design custom gestures for mobile applications.
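To make the idea of visualizing the “negative space” more concrete, the sketch below scores candidate directions for extending a partially drawn gesture by how far the extended stroke stays from the closest existing gesture; a Fieldward-style guide could then map these scores onto a color gradient. This is a minimal illustration under our own assumptions, not the algorithm from [22]: gestures are lists of equally spaced points, similarity is a plain mean point-to-point distance over the shared prefix, and the names `prefix_distance` and `direction_scores` are hypothetical.

```python
import math


def prefix_distance(partial, gesture):
    """Mean point-to-point distance between `partial` and the overlapping prefix of `gesture`."""
    n = min(len(partial), len(gesture))
    return sum(math.dist(partial[i], gesture[i]) for i in range(n)) / n


def direction_scores(partial, existing, k=8, step=1.0):
    """Score k evenly spaced directions (keyed by degrees) for extending `partial`.

    A higher score means the extended stroke stays farther from its closest
    existing gesture, i.e. it heads into the "negative space" of unused gestures.
    """
    x, y = partial[-1]
    scores = {}
    for i in range(k):
        angle = 2 * math.pi * i / k
        candidate = partial + [(x + step * math.cos(angle), y + step * math.sin(angle))]
        # Distance to the most similar existing gesture: low values risk misrecognition.
        scores[360 * i // k] = min(prefix_distance(candidate, g) for g in existing)
    return scores
```

With a single existing gesture that moves right from the origin, continuing to the right scores 0 while heading left (180 degrees) scores highest. A real guide would also need resampling and a distance that matches the recognizer actually in use.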


We are also interested in letting users issue commands on a mobile device with simple gestures. CommandBoard [14] offers a simple, efficient and incrementally learnable technique for issuing gesture commands from a soft keyboard. We transform the area above the keyboard into a command-gesture input space that lets users either draw unique command gestures or type command names and then select execute (Figure 3). Novices who pause see an in-context dynamic guide, whereas experts simply draw. Our studies show that CommandBoard's inline gesture shortcuts are almost twice as fast as markdown symbols, a significant difference, and are significantly preferred by users. We demonstrate additional techniques for more complex commands, and discuss trade-offs with respect to the user’s knowledge and motor skills, as well as the size and structure of the command space. We have filed a patent for the CommandBoard technique.
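The novice/expert distinction can be sketched as a pause detector: the dynamic guide appears only when the finger stays still for longer than a threshold, so experts who draw without hesitating never see it. This is a minimal sketch, not CommandBoard's implementation; it assumes a hypothetical `PAUSE_THRESHOLD_MS` of 300 ms (the report gives no value) and a simple event model in which touch samples and periodic ticks are delivered to a hypothetical `GestureInput` object.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

PAUSE_THRESHOLD_MS = 300  # hypothetical pause threshold; the report does not give a value


@dataclass
class GestureInput:
    """Hypothetical model of one command gesture drawn above the soft keyboard."""
    points: List[Tuple[float, float]] = field(default_factory=list)
    last_sample_ms: Optional[int] = None
    guide_visible: bool = False

    def on_sample(self, x: float, y: float, t_ms: int) -> None:
        # Record a new touch sample; any movement resets the pause timer.
        self.points.append((x, y))
        self.last_sample_ms = t_ms

    def on_tick(self, t_ms: int) -> bool:
        # Periodic check: a finger held still past the threshold suggests a hesitant
        # (novice) user, so the in-context guide is revealed and then stays visible.
        if (not self.guide_visible and self.last_sample_ms is not None
                and t_ms - self.last_sample_ms >= PAUSE_THRESHOLD_MS):
            self.guide_visible = True
        return self.guide_visible
```

Because the guide is triggered by hesitation rather than by an explicit mode switch, the same input space serves both groups: drawing a known gesture and asking for help differ only in timing.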


In the context of an art-science project with the n+1 theater group and the Théâtre de l'Agora d'Evry, we created an interactive installation that was exhibited at the Fête de la Science, at the Agora d'Evry for an entire month, and at the Festival Curiositas. We wanted to understand how to make public art installations interactive in ways that engage both the individual user and the surrounding public. More specifically, we experimented with the principle of 'shaping' from behavioral psychology to create a human-computer partnership: an animated Santa character mirrors the exact movements of the user, but also offers different types of reinforcing or punishing feedback that in turn shape the user's behavior (Figure 4). From the user's perspective, they are always in control. Yet, from the system's perspective, the user moves through successive approximations toward a specific desired behavior. This lets us explore the dynamic nature of control shared between users and technology.
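The shaping principle can be sketched as a loop that reinforces movements falling within a tolerance of a target and then tightens that tolerance, so that each reward requires a closer approximation of the desired behavior. The sketch assumes that a single scalar movement feature (for example, arm height) is compared with the target; the `Shaper` class, its parameters and the two feedback labels are hypothetical and do not describe the installation's actual code.

```python
from dataclasses import dataclass


@dataclass
class Shaper:
    """Hypothetical shaping loop over one scalar movement feature."""
    target: float         # desired value of the feature, e.g. arm height (assumed)
    tolerance: float      # current criterion: how close counts as an approximation
    min_tolerance: float  # final criterion once the desired behavior is reached
    shrink: float = 0.8   # factor by which the criterion tightens after each success

    def feedback(self, observed: float) -> str:
        if abs(observed - self.target) <= self.tolerance:
            # Close enough: reinforce, then demand a closer approximation next time.
            self.tolerance = max(self.min_tolerance, self.tolerance * self.shrink)
            return "reinforce"
        # Outside the current criterion: give punishing feedback instead.
        return "punish"
```

Only the criterion changes over time; the mirroring itself is untouched, which is why the user can feel in control while the system steers them step by step.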


Finally, with BIGnav [20], we experimented with a different kind of partnership. BIGnav is a new multiscale navigation technique based on Bayesian Experimental Design, whose criterion is to maximize the information-theoretic quantity known as mutual information, or information gain. Rather than simply executing user navigation commands, BIGnav interprets user input to update its knowledge about the user’s intended target. It then navigates to a new view that maximizes the information gain expected from the user’s subsequent input. BIGnav creates a novel form of human-computer partnership, in which the computer challenges the user in order to extract more information from each input, making interaction more efficient. We showed that BIGnav is significantly faster than conventional pan and zoom and requires fewer commands for distant targets, especially in non-uniform information spaces. We also applied BIGnav to a realistic application and showed that users can navigate to highly probable points of interest on a map in only a few steps.
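The view-selection step can be illustrated in a simplified one-dimensional setting. The sketch assumes a discrete set of possible targets with a probability distribution over them, views that are intervals of targets, and a hypothetical user model in which the user answers `left`, `in_view` or `right` correctly with probability 1 - `error` and otherwise picks one of the other two answers at random. For each candidate view it computes the mutual information I(Θ; X) = H(X) - H(X | Θ) between the target Θ and the expected input X, navigates to the view that maximizes it, and then updates the belief with Bayes' rule. The actual BIGnav works on multiscale pan-and-zoom views with its own command set [20]; all names here are illustrative.

```python
import math

RESPONSES = ("left", "in_view", "right")  # hypothetical 1-D command set


def intended_response(target, view):
    """Answer an error-free user would give for `target` and view = (start, end)."""
    start, end = view
    if target < start:
        return "left"
    if target >= end:
        return "right"
    return "in_view"


def likelihood(response, target, view, error=0.1):
    """Hypothetical user model P(response | target, view)."""
    if response == intended_response(target, view):
        return 1.0 - error
    return error / (len(RESPONSES) - 1)


def entropy(probs):
    return -sum(p * math.log2(p) for p in probs if p > 0)


def information_gain(prior, view, error=0.1):
    """Mutual information I(target; response) = H(X) - H(X | target), in bits."""
    # Marginal distribution of the user's next input under the current belief.
    p_x = [sum(p * likelihood(x, t, view, error) for t, p in enumerate(prior))
           for x in RESPONSES]
    # Expected uncertainty that remains in the input once the target is known.
    h_x_given_t = sum(p * entropy([likelihood(x, t, view, error) for x in RESPONSES])
                      for t, p in enumerate(prior))
    return entropy(p_x) - h_x_given_t


def best_view(prior, error=0.1):
    """Exhaustively pick the interval view with maximal expected information gain."""
    n = len(prior)
    views = [(a, b) for a in range(n) for b in range(a + 1, n + 1)]
    return max(views, key=lambda v: information_gain(prior, v, error))


def update(prior, view, response, error=0.1):
    """Bayes' rule: posterior belief about the target after observing `response`."""
    post = [p * likelihood(response, t, view, error) for t, p in enumerate(prior)]
    total = sum(post)
    return [p / total for p in post]
```

With a uniform belief over nine targets, the most informative view is the middle third, (3, 6), because it makes the three possible answers equally likely; after a `left` answer, 90% of the probability mass moves to targets 0 to 2. With a non-uniform belief, the chosen view shifts toward the probable targets, which matches the report's observation that the gains are largest in non-uniform information spaces.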